Requirements

- Python 3.10+
- PyTorch 2.0+
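To confirm an environment meets these requirements before installing, a small check like the one below may help. This is a minimal sketch that uses only the standard library and is not part of VELA. The helper names `parse_version`, `meets_minimum` and `check_environment` are hypothetical. It reads the installed PyTorch version from package metadata, so `torch` is never imported.

```python
"""Hypothetical check for the Python and PyTorch versions listed under Requirements."""
from __future__ import annotations  # keeps the annotations valid on older Pythons

import re
import sys
from importlib import metadata


def parse_version(version: str) -> tuple[int, ...]:
    """Extract the leading numeric release part, e.g. '2.1.0+cu118' -> (2, 1, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version: str, minimum: tuple[int, ...]) -> bool:
    """Return True if `version` is at least `minimum`, padding the shorter tuple with zeros."""
    parsed = parse_version(version)
    width = max(len(parsed), len(minimum))

    def pad(parts: tuple[int, ...]) -> tuple[int, ...]:
        return parts + (0,) * (width - len(parts))

    return pad(parsed) >= pad(minimum)


def check_environment() -> list[str]:
    """Collect human-readable problems; an empty list means both requirements are met."""
    problems = []
    if sys.version_info < (3, 10):
        problems.append(f"Python 3.10+ required, found {sys.version.split()[0]}")
    try:
        torch_version = metadata.version("torch")  # reads installed package metadata only
    except metadata.PackageNotFoundError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(torch_version, (2, 0)):
            problems.append(f"PyTorch 2.0+ required, found {torch_version}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "Environment looks OK")
```

Local build suffixes such as `+cu118` are ignored by the comparison, because only the leading numeric part is parsed.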

Installation

```bash
git clone --recursive git@github.com:Ka2ukiMatsuda/VELA.git
cd VELA
pip install -e .
```

Example Usage

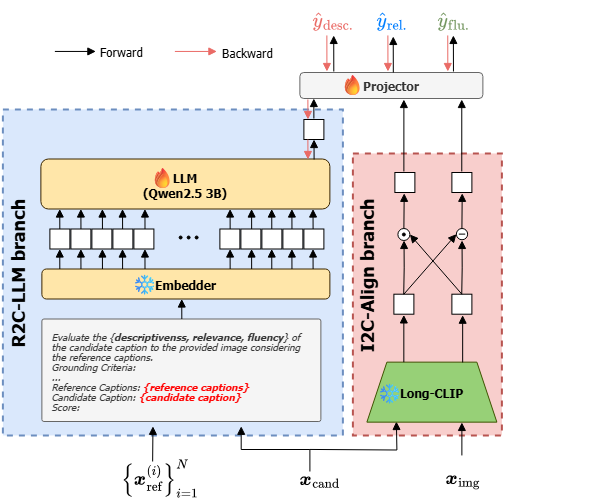

```python
from vela import load_pretrained_model

# Each sample pairs an image file name ("imgid") with a candidate caption
# ("cand") and a list of reference captions ("refs").
samples = [
    {
        "imgid": "sa_1545038.jpg",
        "cand": "A damaged facade of a building with bent railings and...",
        "refs": ["This is the side of a building. It shows mostly one floor and a little of the floor..."],
    },
    {
        "imgid": "sa_1545118.jpg",
        "cand": "The image captures the majestic Krasnoyarsk Fortress in Russia...",
        "refs": ["A monolithic monument surrounded by four pinkish stones on each side..."],
    },
]

# Load the pretrained VELA model.
vela = load_pretrained_model()

# img_dir is the directory that contains the image files named by "imgid".
scores = vela.predict(samples=samples, img_dir="data/images")

print(scores)
```

For complete setup instructions and advanced usage, see the GitHub repository.
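When candidate and reference captions live in separate lists, a small helper can assemble them into the `samples` format used in the example and flag missing image files before calling `vela.predict`. This is a minimal sketch. `build_samples` and `missing_images` are hypothetical helpers, not part of the VELA package. `missing_images` assumes each image is found at `img_dir/imgid`, which matches the example but is an assumption here.

```python
"""Hypothetical helpers for assembling VELA-style samples (not part of the VELA package)."""
from __future__ import annotations

from pathlib import Path


def build_samples(
    imgids: list[str],
    cands: list[str],
    refs: list[list[str]],
) -> list[dict]:
    """Zip parallel lists into dicts with the "imgid", "cand" and "refs" keys from the example."""
    if not (len(imgids) == len(cands) == len(refs)):
        raise ValueError("imgids, cands and refs must have the same length")
    return [
        {"imgid": imgid, "cand": cand, "refs": list(ref_list)}
        for imgid, cand, ref_list in zip(imgids, cands, refs)
    ]


def missing_images(samples: list[dict], img_dir: str | Path) -> list[str]:
    """Return the imgid of every sample whose file does not exist directly under img_dir.

    Assumes images are resolved as img_dir / imgid (an assumption, not confirmed VELA behavior).
    """
    root = Path(img_dir)
    return [sample["imgid"] for sample in samples if not (root / sample["imgid"]).is_file()]


if __name__ == "__main__":
    samples = build_samples(
        imgids=["sa_1545038.jpg"],
        cands=["A damaged facade of a building with bent railings and..."],
        refs=[["This is the side of a building. It shows mostly one floor and a little of the floor..."]],
    )
    # Any names printed here are missing from data/images.
    print(missing_images(samples, "data/images"))
```

Checking for missing files first means a typo in an `imgid` shows up as a readable list instead of an error partway through scoring.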